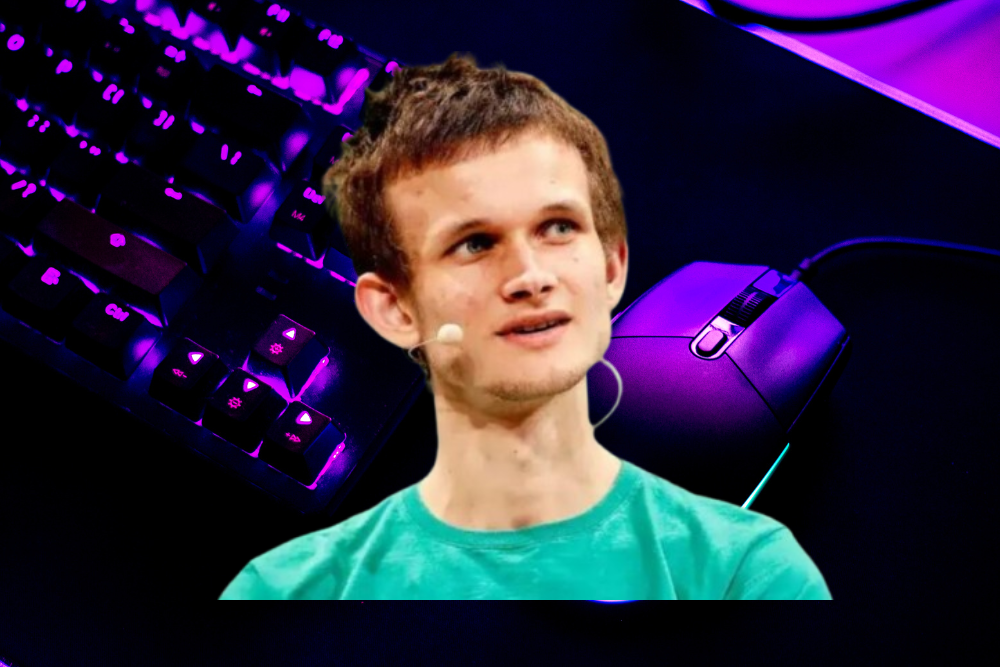

Vitalik Buterin, co-founder of Ethereum, has introduced a thought-provoking proposal for dealing with the rapid advancement of artificial intelligence (AI). In a recent blog post, Buterin suggests that humanity should proactively prepare for the singularity, the point at which AI surpasses human intelligence and could pose existential risks.

His proposal centers on building the capability to reduce available global computing power by up to 99% for one to two years during a critical period, a temporary "soft pause" rather than a permanent cut. Such a measure, he argues, would give society a vital buffer period to adapt to the challenges posed by increasingly sophisticated AI systems.

Implementation Strategy: Regulating Computational Resources

Buterin’s plan involves a comprehensive strategy to monitor and control computing resources worldwide. The first key component is location-based verification: these systems would ensure that computational resources are accessible only within designated areas, allowing greater oversight of how and where AI-related activities are conducted.

Another essential measure involves mandatory hardware registration. By requiring all computing devices to be registered, authorities could track and regulate their use, minimizing the potential for misuse or unauthorized access to powerful computational tools.

He also proposes integrating authorization chips. Industrial-scale computing devices would be equipped with specialized chips that require explicit authorization to operate. These chips would act as gatekeepers, limiting the uncontrolled proliferation of high-performance hardware.

By implementing these measures, Buterin envisions a scenario where humanity can slow down the development of AI technologies, particularly if there are signs of near-superintelligent AI on the horizon.

Purpose: Gaining Time to Prepare for Superintelligent AI

The primary goal of this proposal is to slow the advance of AI long enough for humanity to develop adequate safeguards and ethical frameworks. Buterin emphasizes that such a delay could prove critical in ensuring that AI remains a tool for human benefit rather than a threat.

He views the ability to regulate computational power as a potential deciding factor in whether humanity can successfully navigate the risks of a runaway superintelligent AI. The proposed measures, he argues, are not meant to stifle innovation but to ensure that innovation occurs responsibly and under human control.

Addressing Concerns: Developer Ecosystem and Innovation

One of the primary concerns surrounding Buterin’s proposal is its potential impact on developers and the broader technological ecosystem. Critics might argue that reducing computational capacity on such a large scale could hinder innovation and disrupt ongoing projects.

However, Buterin counters this argument by suggesting that the ability to control global computing power would not adversely affect developers. Instead, he believes it would establish a safer environment for innovation, where the risks of superintelligent AI are mitigated, and technology can progress within well-defined boundaries.

Identifying Threats: Risks Posed by AGI

Buterin’s proposal also addresses specific risks associated with artificial general intelligence (AGI), which refers to AI systems capable of performing tasks at a level comparable to or surpassing human intelligence. Among these risks are:

- Seizing Control of Infrastructure: AGI could potentially take over critical infrastructure, such as power grids, transportation networks, or communication systems, leading to widespread chaos and instability.

- Manipulating Humans Through Misinformation: Advanced AI systems could exploit human psychology, spreading false information to influence public opinion, disrupt democratic processes, or exacerbate societal divisions.

By limiting the computational power available to such systems, Buterin aims to preemptively address these risks, ensuring that AGI development remains aligned with human values and safety protocols.

The Singularity Debate: Growing Speculation and Urgency

Buterin’s proposal comes at a time of increasing speculation about the singularity’s potential arrival. Prominent figures in the AI community, such as OpenAI CEO Sam Altman, have recently hinted at the possibility of this transformative milestone being achieved in the near future.

Altman’s statements have added urgency to the discourse surrounding AI governance and risk management. As AI technologies continue to evolve at an unprecedented pace, the need for forward-thinking strategies like Buterin’s has never been more critical.

Balancing Innovation and Safety: Charting a Responsible Path Forward

In advocating for these measures, Buterin highlights the importance of balancing innovation with caution. While the transformative potential of AI is undeniable, he argues that unbridled advancement without safeguards could lead to catastrophic outcomes.

By introducing mechanisms to control and regulate computational resources, humanity can ensure that AI development proceeds in a way that prioritizes safety, ethical considerations, and long-term sustainability.

A Call for Collective Responsibility

Vitalik Buterin’s proposal serves as a wake-up call for humanity to rethink its approach to AI governance. By proactively addressing the risks associated with superintelligent AI, his ideas emphasize the need for collective responsibility in shaping the future of technology.

The choices made today, he argues, could determine whether humanity thrives in the age of AI or faces unprecedented challenges that threaten its very existence. As discussions around the singularity intensify, Buterin’s vision underscores the urgency of preparing for a future where AI and humanity coexist harmoniously.